Introduction

Have you ever wondered how good it would be to chat with a video? As a blog person myself, it often bores me to watch an hour-long video to find relevant information. Sometimes it feels like a chore to watch a video just to get any useful information out of it. So, I built a chatbot that lets you chat with YouTube videos or any video. This was made possible by GPT-3.5-turbo, Langchain, ChromaDB, Whisper, and Gradio. In this article, I'll do a code walk-through of building a functional chatbot for YouTube videos with Langchain.

Learning Objectives

- Build the web interface using Gradio

- Handle YouTube videos and extract textual data from them using Whisper

- Process and format texts appropriately

- Create embeddings of text data

- Configure ChromaDB to store data

- Initialize a Langchain conversation chain with OpenAI ChatGPT, ChromaDB, and an embeddings function

- Finally, query and stream answers to the Gradio chatbot

Before getting to the coding part, let's get familiar with the tools and technologies we will use.

This article was published as a part of the Data Science Blogathon.

Langchain

Langchain is an open-source tool written in Python that makes Large Language Models data-aware and agentic. So, what does that even mean? Most commercially available LLMs, such as GPT-3.5 and GPT-4, are limited to the data they were trained on. For example, ChatGPT can only answer questions about information it saw during training; anything after September 2021 is unknown to it. This is the core issue that Langchain solves. Be it a Word document or any personal PDF, we can feed the data to an LLM and get a human-like response. It has wrappers for tools like vector DBs, chat models, and embedding functions, which make it easy to build an AI application using just Langchain.

Langchain also allows us to build agents, which are LLM-powered bots. These autonomous agents can be configured for multiple tasks, including data analysis, SQL querying, and even writing basic code. There are many things we can automate with these agents. This is helpful because we can outsource low-level knowledge work to an LLM, saving us time and energy.

In this project, we will use Langchain tools to build a chat app for videos. For more information about Langchain, visit their official website.
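To make the "feed your own data to an LLM" idea a bit more concrete, here is a minimal, dependency-free sketch of what a retrieval step does before a model is called. It splits a document into chunks, picks the chunks that share the most words with the question, and pastes them into the prompt. This is not Langchain's API: retrieve and build_prompt are hypothetical helpers, and simple word overlap stands in for the embedding similarity a real app (including the one we build here) would use.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercased set of words; a crude stand-in for an embedding model
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks: list[str], question: str, k: int = 2) -> list[str]:
    # Rank chunks by how many words they share with the question (stable for ties)
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(chunks: list[str], question: str, k: int = 2) -> str:
    # Put the most relevant private data in front of the model as context
    context = "\n".join(f"- {c}" for c in retrieve(chunks, question, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


doc = [
    "The quarterly report shows revenue grew 12 percent.",
    "Our office moved to a new building in March.",
    "Revenue growth came mostly from the cloud division.",
]
print(build_prompt(doc, "Why did revenue grow?"))
```

The resulting prompt string is what would then be sent to the LLM. Langchain automates exactly this kind of plumbing, with proper embeddings and vector stores in place of word overlap.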

Whisper

Whisper is another offspring of OpenAI. It is a general-purpose speech-to-text model that can convert audio or videos into text. It is trained on a large amount of diverse audio to perform multilingual speech recognition, speech translation, and language identification.

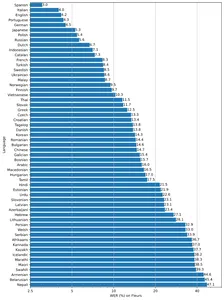

The model is available in five different sizes (tiny, base, small, medium, and large), with speed and accuracy tradeoffs. Model performance also depends on the language. The figure below shows a WER (Word Error Rate) breakdown by language on the FLEURS dataset using the large-v2 model.
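Since that breakdown is reported in WER, here is a small sketch of how the metric is computed. WER is the word-level edit distance between a reference transcript and the model output, divided by the number of reference words:

WER = (S + D + I) / N

Here S, D and I are substitutions, deletions and insertions, and N is the number of reference words. The function name wer is our own. This sketch also skips the text normalization that real evaluations apply before comparing.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate = (substitutions + deletions + insertions) / reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dp[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# One substitution (sat -> sit) and one deletion (the): 2 errors over 6 reference words
print(wer("the cat sat on the mat", "the cat sit on mat"))
```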

Vector Databases

Most machine learning algorithms cannot process raw unstructured data like images, audio, video, and text. The data must first be converted into vector embeddings, which represent it as points in a multi-dimensional space. To get embeddings, we need highly efficient deep-learning models capable of capturing the semantic meaning of the data. This is crucial for building any AI app. To store and query this data, we need databases that can handle embeddings efficiently, which led to the creation of specialized databases called vector databases. There are several open-source options: Chroma, Milvus, and Weaviate are some of the most popular vector databases, and FAISS is a widely used similarity-search library.

Another USP of vector stores is that we can perform high-speed search operations on unstructured data. Once we have the embeddings, we can use them for clustering, searching, sorting, and classification. Because the data points live in a vector space, we can calculate the distance between them to see how closely they are related. Similarity measures such as cosine similarity and Euclidean distance, combined with search methods such as k-nearest neighbours (KNN) and approximate nearest neighbour (ANN) search, are used to find similar data points.
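To illustrate the idea, here is a small sketch of cosine similarity plus an exact (brute-force) k-nearest-neighbour search over a handful of toy vectors. The three-dimensional "embeddings" are made up for the example; real embedding models produce hundreds or thousands of dimensions. ANN indexes in vector databases exist precisely to avoid scoring every stored vector the way this loop does.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # cos(theta) = (a . b) / (|a| * |b|); 1.0 means the vectors point the same way
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def knn(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    # Exact k-nearest-neighbour search: score every stored vector, keep the top k
    scored = [(key, cosine_similarity(query, vec)) for key, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings" (made up for illustration)
store = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.3, 0.1],
    "cars": [0.0, 0.2, 0.9],
}
print(knn([1.0, 0.2, 0.0], store))
```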

We will use the Chroma vector store, an open-source vector database. Chroma also has a Langchain integration, which will come in very handy.

Gradio

The fourth horseman of our app, Gradio, is an open-source library for easily sharing machine learning models. It can also help build demo web apps in Python with its components and events.

If you are unfamiliar with Gradio and Langchain, read the following articles before moving ahead.

Let's now start building it.

Setup Dev Env

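To set up the development environment, create a Python virtual environment (Python 3.10 or later, since the code uses the X | Y type-hint syntax) or create a local dev environment with Docker.

Now install all these dependencies. Whisper also needs the ffmpeg command-line tool installed on your system.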

```text
pytube==15.0.0
gradio==3.27.0
openai==0.27.4
langchain==0.0.148
chromadb==0.3.21
tiktoken==0.3.3
openai-whisper==20230314
```
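Because this project depends on early, fast-moving releases of Gradio and Langchain, it can be worth confirming that the pinned versions are what you actually installed. The following is an optional sanity check using only the standard library. check_versions is a hypothetical helper, and the pins are copied from the list above.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins copied from the dependency list above
REQUIRED = {
    "pytube": "15.0.0",
    "gradio": "3.27.0",
    "openai": "0.27.4",
    "langchain": "0.0.148",
    "chromadb": "0.3.21",
    "tiktoken": "0.3.3",
    "openai-whisper": "20230314",
}


def check_versions(required: dict[str, str]) -> dict[str, str]:
    """Return {package: problem} for every pin that is missing or mismatched."""
    problems = {}
    for package, pinned in required.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            problems[package] = "not installed"
            continue
        if installed != pinned:
            problems[package] = f"installed {installed}, expected {pinned}"
    return problems


if __name__ == "__main__":
    issues = check_versions(REQUIRED)
    for package, problem in issues.items():
        print(f"{package}: {problem}")
    print("All pins satisfied" if not issues else f"{len(issues)} problem(s) found")
```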

Import Libraries

```python
import os
import tempfile
import whisper
import datetime as dt
import gradio as gr
from langchain.embeddings import OpenAIEmbeddings
from langchain.vectorstores import Chroma
from langchain.chat_models import ChatOpenAI
from langchain.chains import ConversationalRetrievalChain
from pytube import YouTube
from typing import TYPE_CHECKING, Any, Generator, List
```

Create Web Interface

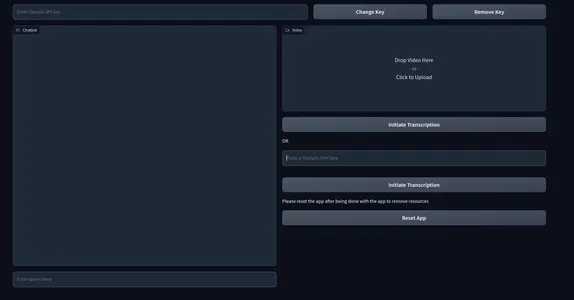

We will use Gradio Blocks and components to build the front end of our application. Here's how you can make the interface. Feel free to customize it as you see fit.

```python
with gr.Blocks() as demo:
    with gr.Row():
        # with gr.Group():
        with gr.Column(scale=0.70):
            api_key = gr.Textbox(placeholder="Enter OpenAI API key",
                                 show_label=False, interactive=True).style(container=False)
        with gr.Column(scale=0.15):
            change_api_key = gr.Button('Change Key')
        with gr.Column(scale=0.15):
            remove_key = gr.Button('Remove Key')

    with gr.Row():
        with gr.Column():
            chatbot = gr.Chatbot(value=[]).style(height=650)
            query = gr.Textbox(placeholder="Enter query here",
                               show_label=False).style(container=False)

        with gr.Column():
            video = gr.Video(interactive=True)
            start_video = gr.Button('Initiate Transcription')
            gr.HTML('OR')
            yt_link = gr.Textbox(placeholder="Paste a YouTube link here",
                                 show_label=False).style(container=False)
            yt_video = gr.HTML(label=True)
            start_ytvideo = gr.Button('Initiate Transcription')
            gr.HTML('Please reset the app after you are done with it to free up resources')
            reset = gr.Button('Reset App')

if __name__ == "__main__":
    # In Gradio 3.x, generator handlers (QuestionAnswer streams its answer) need the queue
    demo.queue()
    demo.launch()
```

The interface will look like the screenshot above.

Here, we have a textbox that takes the OpenAI key as input, plus two buttons for changing and removing the API key. We also have a chat UI on the left and a box for rendering local videos on the right. Immediately below the video box, there is a textbox asking for a YouTube link, and each input has its own "Initiate Transcription" button.

Gradio Events

Now we will define events to make the app interactive. Add the code below at the end of the gr.Blocks() block, so the listeners are registered inside it.

```python
    # Add these listeners inside the `with gr.Blocks() as demo:` block, after the components
    start_video.click(fn=lambda: (pause, update_yt),
                      outputs=[start_ytvideo, yt_video]).then(
        fn=embed_video, inputs=[video],
        outputs=[video, chatbot]).success(
        fn=lambda: resume,
        outputs=[start_ytvideo])

    start_ytvideo.click(fn=lambda: (pause, update_video),
                        outputs=[start_video, video]).then(
        fn=embed_yt, inputs=[yt_link],
        outputs=[yt_video, chatbot]).success(
        fn=lambda: resume, outputs=[start_video])

    query.submit(fn=add_text, inputs=[chatbot, query],
                 outputs=[chatbot]).success(
        fn=QuestionAnswer,
        inputs=[chatbot, query, yt_link, video],
        outputs=[chatbot, query])

    api_key.submit(fn=set_apikey, inputs=api_key, outputs=api_key)
    change_api_key.click(fn=enable_api_box, outputs=api_key)
    remove_key.click(fn=remove_key_box, outputs=api_key)
    reset.click(fn=reset_vars, outputs=[chatbot, query, video, yt_video])
```

- start_video: When clicked, triggers the process of getting texts from the uploaded video and creating a conversational chain.

- start_ytvideo: When clicked, does the same for the YouTube video and, when complete, renders the YouTube video just below it.

- query: Responsible for streaming the response from the LLM to the chat UI.

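The pause and resume updates disable the other transcription button while one transcription is running and re-enable it once that transcription succeeds. The rest of the events handle the API key and reset the app.

We have defined the events but not yet the functions they trigger. Those functions (and the pause/resume updates) must be defined before the gr.Blocks() block runs, because the listeners receive them at the moment they are registered.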

Backend

To keep things from getting complicated and messy, let's first outline the processes we will deal with in the backend.

- Handle API keys.

- Handle uploaded videos.

- Transcribe videos to get texts.

- Create chunks out of the video texts.

- Create embeddings from the texts.

- Store the vector embeddings in the ChromaDB vector store.

- Create a Conversational Retrieval chain with Langchain.

- Send relevant documents to the OpenAI chat model (gpt-3.5-turbo).

- Fetch the answer and stream it to the chat UI.

We will do all of this along with some exception handling.

Define a few global variables.

```python
chat_history = []
result = None
chain = None
run_once_flag = False
call_to_load_video = 0

enable_box = gr.Textbox.update(value=None, placeholder="Add your OpenAI API key",
                               interactive=True)
disable_box = gr.Textbox.update(value="OpenAI API key is Set",
                                interactive=False)
remove_box = gr.Textbox.update(value="Your API key successfully removed",
                               interactive=False)
pause = gr.Button.update(interactive=False)
resume = gr.Button.update(interactive=True)
update_video = gr.Video.update(value=None)
update_yt = gr.HTML.update(value=None)
```

Handle API Keys

When a user submits a key, it gets set as an environment variable, and we also disable the textbox against further input. Pressing Change Key makes it editable again. Clicking Remove Key removes the key.

```python
enable_box = gr.Textbox.update(value=None, placeholder="Add your OpenAI API key",
                               interactive=True)
disable_box = gr.Textbox.update(value="OpenAI API key is Set", interactive=False)
remove_box = gr.Textbox.update(value="Your API key successfully removed",
                               interactive=False)


def set_apikey(api_key):
    # Langchain's OpenAI wrappers look up OPENAI_API_KEY from the environment
    os.environ['OPENAI_API_KEY'] = api_key
    return disable_box


def enable_api_box():
    return enable_box


def remove_key_box():
    os.environ['OPENAI_API_KEY'] = ''
    return remove_box
```

Handle Videos

Next up, we will deal with uploaded videos and YouTube links, with a separate function for each case. For YouTube links, we will also create an iframe embed link. In both cases, we call another function, make_chain(), which is responsible for creating the chain.

These functions are triggered when someone uploads a video or provides a YouTube link and presses the Initiate Transcription button.
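One caveat before the code: embed_yt builds the embed URL with a plain string replacement on "watch?v=". That works for a simple link like https://www.youtube.com/watch?v=ID. It produces a broken URL when the link carries extra parameters such as &t=42s, and it leaves youtu.be short links untouched. Below is a sketch of a more tolerant conversion using urllib.parse from the standard library. to_embed_url is a hypothetical helper, not part of the original app.

```python
from urllib.parse import parse_qs, urlparse


def to_embed_url(link: str) -> str:
    """Convert common YouTube link shapes into an /embed/ URL (hypothetical helper)."""
    parsed = urlparse(link)
    host = parsed.netloc.lower()
    if host in ("youtu.be", "www.youtu.be"):
        # Short links carry the video ID in the path: https://youtu.be/<id>
        video_id = parsed.path.lstrip("/")
    elif host.endswith("youtube.com") and parsed.path == "/watch":
        # Watch links carry it in the v= query parameter, possibly next to &t=, &list=, ...
        video_id = parse_qs(parsed.query).get("v", [""])[0]
    else:
        raise ValueError(f"Unrecognised YouTube link: {link}")
    if not video_id:
        raise ValueError(f"No video ID found in: {link}")
    return f"https://www.youtube.com/embed/{video_id}"


print(to_embed_url("https://www.youtube.com/watch?v=abc123&t=42s"))
print(to_embed_url("https://youtu.be/abc123"))
```

Both calls produce the same https://www.youtube.com/embed/abc123 URL.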

```python
def embed_yt(yt_link: str):
    # This function transcribes a YouTube video and embeds it into the page.
    global run_once_flag, call_to_load_video

    # Check that a YouTube link was provided.
    if not yt_link:
        raise gr.Error('Paste a YouTube link')

    # Reset the flag so make_chain() builds a fresh chain for this video.
    run_once_flag = False
    # Reset the counter so process_text() transcribes this video instead of reusing an old result.
    call_to_load_video = 0

    # Create a chain using the YouTube link.
    make_chain(url=yt_link)

    # Turn the watch URL into an embed URL.
    url = yt_link.replace('watch?v=', 'embed/')

    # Create the HTML code for the embedded YouTube video.
    embed_html = f"""<iframe width="750" height="315" src="{url}"
    title="YouTube video player" frameborder="0"
    allow="accelerometer; autoplay; clipboard-write;
    encrypted-media; gyroscope; picture-in-picture"
    allowfullscreen></iframe>"""

    # Return the HTML code and an empty list to clear the chatbot.
    return embed_html, []


def embed_video(video: str | None = None):
    # This function transcribes an uploaded video and returns it to the video component.
    global run_once_flag, call_to_load_video

    # Check that a video was uploaded.
    if not video:
        raise gr.Error('Upload a Video')

    # Reset the state so a new transcription and chain are created.
    run_once_flag = False
    call_to_load_video = 0

    # Create a chain using the video.
    make_chain(video=video)

    # Return the video and an empty list to clear the chatbot.
    return video, []
```

Create Chain

This is one of the most important steps of all. It involves creating a Chroma vector store and a Langchain chain. We will use a conversational retrieval chain for our use case. We will use OpenAI embeddings, but for actual deployments, you can use free embedding models such as Hugging Face sentence encoders.

```python
def make_chain(url=None, video=None) -> (ConversationalRetrievalChain | Any | None):
    global chain, run_once_flag

    # Check if a YouTube link or video is provided
    if not url and not video:
        raise gr.Error('Please provide a YouTube link or Upload a video')

    if not run_once_flag:
        run_once_flag = True

        # Process the text from the video
        grouped_texts, time_list = process_text(url=url) if url else process_text(video=video)

        # Convert time_list to metadata format: each chunk's start time becomes its "source"
        time_list = [{'source': str(t.time())} for t in time_list]

        # Create a vector store from the processed texts with metadata
        vector_stores = Chroma.from_texts(texts=grouped_texts, collection_name="test",
                                          embedding=OpenAIEmbeddings(),
                                          metadatas=time_list)

        # Create a ConversationalRetrievalChain that retrieves the 5 most similar chunks
        chain = ConversationalRetrievalChain.from_llm(ChatOpenAI(temperature=0.0),
                                                      retriever=vector_stores.as_retriever(
                                                          search_kwargs={"k": 5}),
                                                      return_source_documents=True)

    return chain
```

- Get texts and metadata from either a YouTube URL or a video file.

- Create a Chroma vector store from the texts and metadata.

- Build a chain using OpenAI gpt-3.5-turbo and the Chroma vector store.

- Return the chain.
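To give a feel for what the conversational retrieval chain does on each call, here is a simplified, framework-free sketch. It assumes the LLM and the retriever are plain functions and that documents are plain strings; the prompt wording is invented and is not Langchain's. The three steps are:

1. On follow-up turns, fold the chat history and the new question into a standalone question.
2. Retrieve the chunks most similar to that question.
3. "Stuff" those chunks into a single prompt for the answer.

```python
from typing import Callable

# Hypothetical stand-ins: in the real chain these are a ChatOpenAI model and a Chroma retriever
LLM = Callable[[str], str]
Retriever = Callable[[str], list[str]]


def conversational_retrieval(question: str,
                             chat_history: list[tuple[str, str]],
                             llm: LLM,
                             retriever: Retriever) -> dict:
    # Step 1: rewrite a follow-up into a standalone question (skipped on the first turn)
    if chat_history:
        history = "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in chat_history)
        standalone = llm(
            "Rephrase the follow-up as a standalone question.\n"
            f"{history}\nFollow-up: {question}"
        )
    else:
        standalone = question

    # Step 2: fetch the chunks most similar to the standalone question
    docs = retriever(standalone)

    # Step 3: stuff the chunks into one prompt and ask for the answer
    context = "\n".join(docs)
    answer = llm(f"Context:\n{context}\nQuestion: {standalone}")
    return {"answer": answer, "source_documents": docs}


# Tiny demo with fake components
def fake_llm(prompt: str) -> str:
    return "What happens after the intro?" if prompt.startswith("Rephrase") else "The demo starts."


def fake_retriever(query: str) -> list[str]:
    return [f"[00:01:30] chunk matching: {query}"]


print(conversational_retrieval("And after that?", [("What happens first?", "The intro.")],
                               fake_llm, fake_retriever))
```

This is also why the app passes chat_history alongside every query: without it, a follow-up like "And after that?" would be sent to the retriever with no context.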

Process Texts

In this step, we will slice the video texts appropriately and create the metadata object used in the chain-building process above.

```python
def process_text(video=None, url=None) -> tuple[list, list[dt.datetime]]:
    global call_to_load_video, result

    # Transcribe only once per video; later calls reuse the cached Whisper result
    if call_to_load_video == 0:
        result = process_video(url=url) if url else process_video(video=video)
        call_to_load_video += 1

    # Extract each segment's text and start time (truncated to whole seconds).
    # A list of pairs, not a dict, keeps repeated sentences and their order.
    formatted_texts = []
    for res in result['segments']:
        # Interpret the offset as UTC so the local timezone does not shift it
        start_time = dt.datetime.fromtimestamp(res['start'], tz=dt.timezone.utc)
        start_time_formatted = start_time.strftime("%H:%M:%S")
        timestamp = dt.datetime.strptime(start_time_formatted, '%H:%M:%S')
        formatted_texts.append((res['text'], timestamp))

    grouped_texts = []
    current_group = ''
    time_list = [formatted_texts[0][1]]
    previous_time = None
    time_difference = dt.timedelta(seconds=30)

    # Group texts that start within 30 seconds of the current group's start
    for text, timestamp in formatted_texts:
        if previous_time is None or timestamp - previous_time <= time_difference:
            current_group += text
        else:
            grouped_texts.append(current_group)
            time_list.append(timestamp)
            current_group = text
        previous_time = time_list[-1]

    # Append the last group of texts
    if current_group:
        grouped_texts.append(current_group)

    return grouped_texts, time_list
```

- The process_text function takes either a URL or a video path. The video is transcribed in the process_video function, which gives us the final texts.

- We then get the start time of each sentence (from Whisper) and group the sentences into 30-second windows.

- Finally, we return the grouped texts and the start time of each group.
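To see the 30-second grouping in isolation, here is a simplified, standalone version of the same idea. It works on raw seconds instead of datetime objects. group_segments and the sample segments are hypothetical; the segments are shaped like the entries of Whisper's result["segments"], each with a start time in seconds and its text.

```python
def group_segments(segments: list[dict], window: float = 30.0) -> tuple[list[str], list[float]]:
    """Merge consecutive segments whose start lies within `window` seconds of the
    start of the current group. Returns (group_texts, group_start_seconds)."""
    groups, starts = [], []
    for seg in segments:
        if starts and seg["start"] - starts[-1] <= window:
            groups[-1] += seg["text"]       # still inside the current window
        else:
            groups.append(seg["text"])      # open a new group at this segment
            starts.append(seg["start"])
    return groups, starts


# Hypothetical segments shaped like Whisper's output
segments = [
    {"start": 0.0, "text": " Hi everyone."},
    {"start": 12.5, "text": " Today we build a chatbot."},
    {"start": 31.0, "text": " First, install the packages."},
    {"start": 45.2, "text": " Then import them."},
    {"start": 70.0, "text": " Now the UI."},
]
texts, starts = group_segments(segments)
for start, text in zip(starts, texts):
    print(f"{int(start // 3600):02d}:{int(start % 3600 // 60):02d}:{int(start % 60):02d} ->{text}")
```

Each group's start time is what ends up in the chunk's metadata, which is how an answer can be traced back to a point in the video.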

Process Video

In this step, we transcribe video or audio files to get texts. We will use the Whisper base model for transcription.
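The base model is a reasonable default, but the size choice is a speed/accuracy/memory tradeoff. The figures below are the approximate values from the openai-whisper README for the multilingual models. Relative speeds are roughly 32x, 16x, 6x, 2x and 1x from tiny to large. pick_whisper_model is a hypothetical helper. The name it returns is one that whisper.load_model() accepts.

```python
# Approximate figures from the openai-whisper README (multilingual models):
# (name, parameters in millions, required VRAM in GB)
WHISPER_MODELS = [
    ("tiny", 39, 1),
    ("base", 74, 1),
    ("small", 244, 2),
    ("medium", 769, 5),
    ("large", 1550, 10),
]


def pick_whisper_model(vram_gb: float, english_only: bool = False) -> str:
    """Return the largest Whisper checkpoint that fits in `vram_gb` (hypothetical helper)."""
    fitting = [name for name, _, vram in WHISPER_MODELS if vram <= vram_gb]
    if not fitting:
        raise ValueError("Not enough memory for any Whisper model")
    name = fitting[-1]
    # English-only ".en" variants exist for every size except large
    if english_only and name != "large":
        name += ".en"
    return name


print(pick_whisper_model(4))                     # small
print(pick_whisper_model(4, english_only=True))  # small.en
```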

```python
def process_video(video=None, url=None) -> dict[str, str | list]:
    # YouTube links are downloaded first; uploaded videos already have a local path
    if url:
        file_dir = load_video(url)
    else:
        file_dir = video

    print('Transcribing Video with whisper base model')
    model = whisper.load_model("base")
    # The result holds the full "text", per-sentence "segments", and the detected "language"
    result = model.transcribe(file_dir)
    return result
```

For YouTube videos, since we cannot process them directly, we must handle them separately. We will use a library called Pytube to download the audio or video of the YouTube video. Here's how you can do it.

```python
def load_video(url: str) -> str:
    # This function downloads a YouTube video's audio and returns the path to the downloaded file.

    # Create a YouTube object for the given URL.
    yt = YouTube(url)

    # Get the target directory inside the system temp folder.
    target_dir = os.path.join(tempfile.gettempdir(), 'Youtube')

    # If the target directory does not exist, create it.
    os.makedirs(target_dir, exist_ok=True)

    # Get the audio stream of the video.
    stream = yt.streams.get_audio_only()

    # Download the audio stream to the target directory.
    print('----DOWNLOADING AUDIO FILE----')
    # download() returns the full path of the saved file (its filename is a sanitized title).
    path = stream.download(output_path=target_dir)

    # Return the path of the downloaded file.
    return path
```

- Create a YouTube object for the given URL.

- Create a temporary target directory path.

- Check if the path exists; otherwise create the directory.

- Download the audio of the video.

- Return the path of the downloaded file.

This was the bottom-up process, from getting texts from videos to creating the chain. Now, all that remains is configuring the chatbot.

Configure Chatbot

All we need now is to send a query and the chat history to the chain to fetch our answers. So, we will define a function that triggers only when a query is submitted.

```python
def add_text(history, text):
    if not text:
        raise gr.Error('Enter text')
    # Use a list, not a tuple, so QuestionAnswer can append to the answer in place
    history = history + [[text, '']]
    return history


def QuestionAnswer(history, query=None, url=None, video=None) -> Generator[Any | None, Any, None]:
    # This function answers a question using the conversational retrieval chain.
    global chat_history

    # Check that exactly one of a YouTube link or a local video file is provided.
    if video and url:
        # Raise an error if both a YouTube link and a local video file are provided.
        raise gr.Error('Upload a video or a YouTube link, not both')
    elif not url and not video:
        # Raise an error if no input is provided.
        raise gr.Error('Provide a YouTube link or Upload a video')
    if chain is None:
        raise gr.Error('Initiate transcription before asking questions')

    # Query the chain with the question and the conversation so far.
    result = chain({"question": query, 'chat_history': chat_history}, return_only_outputs=True)

    # Add the question and answer to the chat history.
    chat_history += [(query, result["answer"])]

    # Stream the answer by appending it to the last chatbot message one character at a time.
    for char in result['answer']:
        history[-1][-1] += char
        yield history, ''
```

We pass the chat history along with the query to keep the context of the conversation. Finally, we stream the answer back to the chatbot. And don't forget to define the reset functionality that resets all the values.

That's all there is to it. Now, launch your application and start chatting with videos.

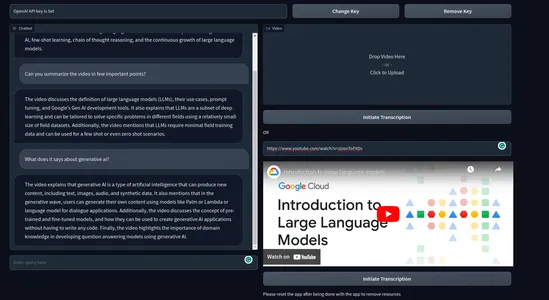

This is how the final product looks.


Real-life Use Cases

An application that lets end users chat with any video or audio can have a wide range of use cases. Here are some real-life use cases for this chatbot.

- Education: Students often go through hours-long video lectures. This chatbot can help students learn from lecture videos and extract useful information quickly, saving time and energy. This can significantly improve the learning experience.

- Legal: Law professionals often go through lengthy legal proceedings and depositions to analyze cases, prepare documents, conduct research, or monitor compliance. A chatbot like this can go a long way in decluttering such tasks.

- Content Summarization: This app can analyze video content and generate summarized text versions. This lets the user grasp the highlights of a video without watching it entirely.

- Customer Interaction: Brands can incorporate a video chatbot feature for their products or services. This can be helpful for businesses that sell high-ticket products or services, or ones that require a lot of explanation.

- Video Translation: We can translate the text corpus into other languages. This can facilitate cross-lingual communication, language learning, or accessibility for non-native speakers.

These are some of the potential use cases I could think of. There can be many more useful applications of a chatbot for videos.

Conclusion

So, this was all about building a functional demo web app for a video chatbot. We covered a lot of concepts throughout the article. Here are the key takeaways.

- We learned about Langchain, a popular tool for creating AI applications with ease.

- Whisper is a potent speech-to-text model by OpenAI: an open-source model that can convert audio and video to text.

- We learned how vector databases facilitate effective storing and querying of vector embeddings.

- We built a fully functional web app from scratch using Langchain, Chroma, and OpenAI models.

- We also discussed potential real-life use cases of our chatbot.

That's all for now. I hope you liked it, and do consider following me on Twitter for more development-related content.

GitHub Repository: sunilkumardash9/chatgpt-for-videos. If you find this helpful, do ⭐ the repository.

Frequently Asked Questions

A. LangChain is an open-source framework that simplifies the creation of applications using large language models. It can be used for a variety of tasks, including chatbots, document analysis, code analysis, question answering, and generative tasks.

A. Chains are a sequence of steps that are executed in order. They are used to define a specific task or process. For example, a chain could be used to summarize a document, answer a question, or generate creative text.

Agents are more complex than chains. They can decide which steps to execute, and they use the results of previous steps (observations) to decide what to do next. Agents are often used for tasks that require a lot of creativity or reasoning, for example, data analysis and code generation.

A. 1. Action agents decide on an action to take and execute it, one step at a time. They are more conventional and suitable for small tasks.

2. Plan-and-execute agents first decide on a plan of actions and then execute those actions one by one. They are more complex and suitable for tasks that require more planning and flexibility.
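To make the difference between the two agent types tangible, here is a toy sketch. It is not Langchain's agent API. The "LLM" is replaced by scripted decision functions, and the tools (add, upper) are hypothetical. The action agent picks one step at a time and sees each result before choosing the next. The plan-and-execute agent commits to the whole list of steps up front.

```python
from typing import Callable

# Hypothetical tools the agents may call
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
    "upper": lambda arg: arg.upper(),
}


def action_agent(task: str, decide: Callable[[str, list[str]], tuple[str, str]],
                 max_steps: int = 5) -> str:
    """Pick one action, run it, observe the result, repeat until 'finish'."""
    observations: list[str] = []
    for _ in range(max_steps):
        tool, arg = decide(task, observations)   # an LLM would make this choice
        if tool == "finish":
            return arg
        observations.append(TOOLS[tool](arg))   # the observation feeds the next decision
    return "stopped: step limit reached"


def plan_and_execute(task: str, plan: Callable[[str], list[tuple[str, str]]]) -> list[str]:
    """Decide every step up front, then run the steps in order."""
    return [TOOLS[tool](arg) for tool, arg in plan(task)]


def scripted_decider(task: str, observations: list[str]) -> tuple[str, str]:
    # Stand-in for the LLM: add first, then shout the result, then finish
    if not observations:
        return "add", "2+3"
    if len(observations) == 1:
        return "upper", f"the answer is {observations[0]}"
    return "finish", observations[-1]


print(action_agent("What is 2+3? Shout it.", scripted_decider))  # THE ANSWER IS 5
```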

A. Langchain can integrate both LLMs and chat models. LLMs are models that take a string as input and return a string. Chat models take a list of chat messages as input and output a chat message.
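The two interfaces can be summarized as type signatures. Below is a minimal sketch using typing.Protocol. ChatMessage, TextLLM, ChatModel and the echo models are hypothetical illustrations; Langchain has its own message classes. The adapter at the end shows how a chat model can be wrapped to behave like a string-in, string-out LLM.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class ChatMessage:
    role: str      # e.g. "system", "user", "assistant"
    content: str


class TextLLM(Protocol):
    # String in, string out
    def __call__(self, prompt: str) -> str: ...


class ChatModel(Protocol):
    # List of messages in, one message out
    def __call__(self, messages: list[ChatMessage]) -> ChatMessage: ...


def echo_llm(prompt: str) -> str:
    return f"completion for: {prompt}"


def echo_chat(messages: list[ChatMessage]) -> ChatMessage:
    # Reply to the most recent user message
    last_user = next(m for m in reversed(messages) if m.role == "user")
    return ChatMessage(role="assistant", content=f"reply to: {last_user.content}")


def as_text_llm(chat: ChatModel) -> TextLLM:
    # Adapter: wrap a chat model so it can be used where a text LLM is expected
    return lambda prompt: chat([ChatMessage("user", prompt)]).content


llm: TextLLM = as_text_llm(echo_chat)
print(llm("Summarize the video"))  # reply to: Summarize the video
```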

A. Yes, Langchain is an open-source, free-to-use tool, but using OpenAI models through it (as most examples, including this one, do) requires an OpenAI API key, which incurs charges.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.