Introduction

As data analysts, it's our responsibility to ensure data integrity so that we obtain accurate and reliable insights. Data cleaning plays a crucial role in this process, and duplicate values are among the most common issues data analysts encounter. Duplicate values can misrepresent insights, so it's essential to have efficient methods for dealing with them. In this article, we'll learn how to identify and handle duplicate values, as well as best practices for managing duplicates.

Identifying Duplicate Values

The first step in handling duplicate values is to identify them, which makes it a crucial step in data cleaning. Pandas offers several methods for identifying duplicate values within a DataFrame. In this section, we'll discuss the duplicated() and value_counts() functions.

Using duplicated()

The duplicated() function is a Pandas DataFrame method that checks for duplicate rows. Its output is a boolean Series with the same length as the input DataFrame, where each element indicates whether or not the corresponding row is a duplicate.

Let's consider a simple example of the duplicated() function:

import pandas as pd

data = {
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45]
}

df = pd.DataFrame(data)

# True marks a row that repeats an earlier row in every column
df_duplicates = df.duplicated()
print(df_duplicates)

Output:

0    False
1    False
2    False
3    False
4    False
5     True
dtype: bool

In the example above, we created a DataFrame containing the names of students and their ratings. We invoked duplicated() on the DataFrame, which generated a boolean Series with False representing unique rows and True representing duplicate rows.
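Because duplicated() returns a boolean Series, it can be used directly as a mask. The sketch below, reusing the same sample data, shows one way to list the duplicate rows and count them; the variable names mask, duplicate_rows, and n_duplicates are only for illustration.

```python
import pandas as pd

# Same student data as in the example above
data = {
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
}
df = pd.DataFrame(data)

# Boolean mask: True for every row that repeats an earlier row
mask = df.duplicated()

# Boolean indexing selects only the flagged rows
duplicate_rows = df[mask]

# True counts as 1, so sum() gives the number of duplicate rows
n_duplicates = mask.sum()

print(duplicate_rows)
print(n_duplicates)  # 1
```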

In this example, the first occurrence of a value is considered unique. However, what if we want the last occurrence to be considered unique, or we don't want to consider all columns when identifying duplicates? In that case, we can adjust the behavior of duplicated() by changing its parameter values.

Parameters: Subset and Keep

The duplicated() function offers customization through two optional parameters, described below:

- subset: This parameter lets us specify which columns to consider during duplicate detection. subset is None by default, meaning that every column in the DataFrame is considered. To restrict the check, we pass subset a list of column names. Here is an example of using the subset parameter:

df_duplicates = df.duplicated(subset=['StudentName'])
print(df_duplicates)

Output:

0    False
1    False
2    False
3    False
4    False
5     True
dtype: bool

- keep: This option lets us choose which occurrence of a duplicated row is marked as a duplicate. The possible values for keep are:
  - "first": The default value. It marks all duplicates except the first occurrence, treating the first value as unique.
  - "last": Treats the last occurrence as unique and marks all other occurrences as duplicates.
  - False: Marks every occurrence as a duplicate.

Here is an example of using the keep parameter:

df_duplicates = df.duplicated(keep='last')
print(df_duplicates)

Output:

0     True
1    False
2    False
3    False
4    False
5    False
dtype: bool
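The keep=False option described above has no example of its own. The sketch below, on the same sample data, shows that it flags both copies of the duplicated Mark row, which is handy for reviewing every member of a duplicate group before deciding what to do with it.

```python
import pandas as pd

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

# keep=False marks every occurrence of a duplicated row, including the first
all_copies = df.duplicated(keep=False)
print(all_copies.tolist())
# [True, False, False, False, False, True]

# Show every copy of each duplicate side by side
print(df[all_copies])
```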

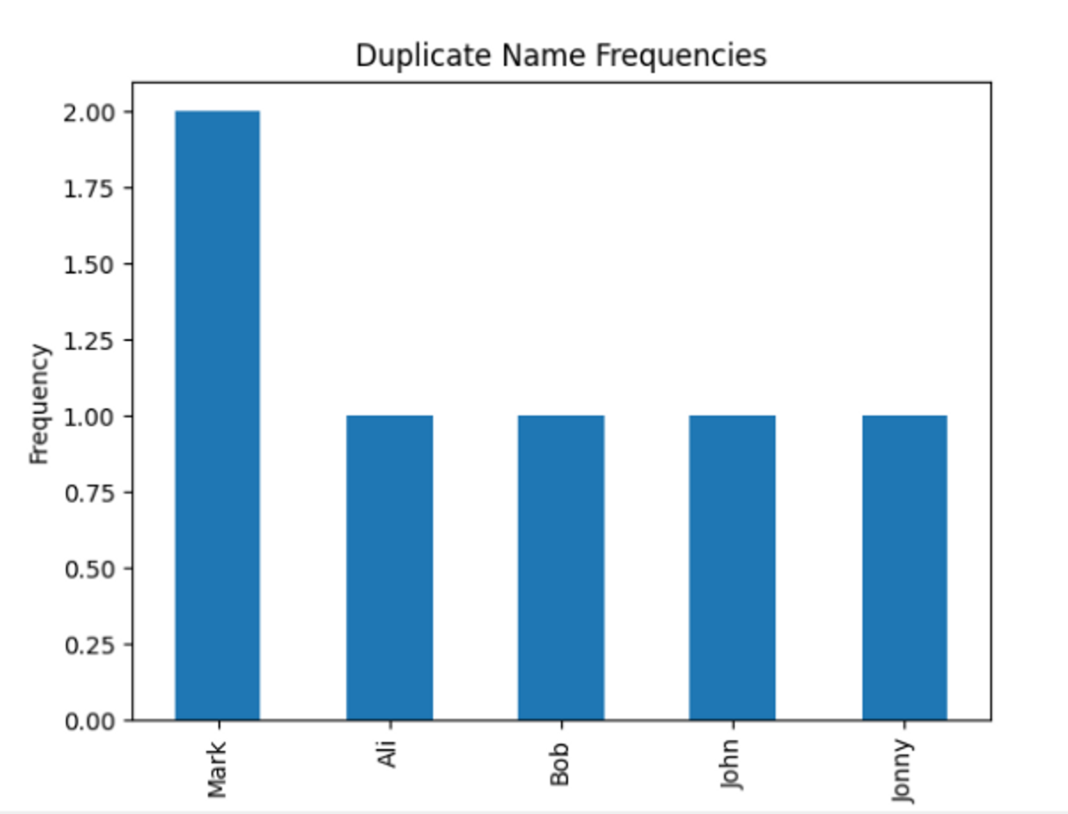

Visualize Duplicate Values

The value_counts() function is the second technique for identifying duplicates. It counts the number of times each unique value appears in a column, so by applying value_counts() to a specific column, we can see (and then visualize) the frequency of each value.

Here is an example of using the value_counts() function:

import matplotlib.pyplot as plt
import pandas as pd

data = {
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45]
}

df = pd.DataFrame(data)

# Count how many times each name occurs
name_counts = df['StudentName'].value_counts()
print(name_counts)

Output:

Mark     2
Ali      1
Bob      1
John     1
Johny    1
Name: StudentName, dtype: int64

Let's now visualize the duplicate values. A bar chart is an effective way to show how often each value occurs:

name_counts.plot(kind='bar')
plt.xlabel('Student Name')
plt.ylabel('Frequency')
plt.title('Duplicate Name Frequencies')
plt.show()
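A bar chart of every value can get crowded when most values are unique. One option, sketched below on the same sample data, is to filter the value_counts() result down to values that occur more than once before plotting; repeated_names is an illustrative variable name.

```python
import pandas as pd

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

name_counts = df['StudentName'].value_counts()

# Boolean filter on the counts: keep only names that occur more than once
repeated_names = name_counts[name_counts > 1]
print(repeated_names.index.tolist())  # ['Mark']
```

The filtered Series can then be passed to plot(kind='bar') in the same way as above.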

Dealing with Duplicate Values

After identifying duplicate values, it's time to address them. In this section, we'll explore various strategies for removing and updating duplicate values using the pandas drop_duplicates() and replace() functions. Additionally, we'll discuss aggregating data with duplicate values using the groupby() function.

Removing Duplicate Values

The most common approach for handling duplicates is to remove them from the DataFrame. To eliminate duplicate records, we'll use the drop_duplicates() function. By default, this function keeps the first instance of each duplicate row and removes the subsequent occurrences. It identifies duplicates based on all column values; however, we can specify which columns to consider using the subset parameter.

The syntax of drop_duplicates() with its default parameter values is as follows:

DataFrame.drop_duplicates(subset=None, keep='first', inplace=False)

The subset and keep parameters have the same meaning as in duplicated(). If we set the third parameter, inplace, to True, all modifications are made directly on the original DataFrame and the method returns None. By default, inplace is False, so drop_duplicates() returns a new DataFrame and leaves the original unchanged.

Here is an example of the drop_duplicates() function:

df.drop_duplicates(keep='last', inplace=True)
print(df)

Output:

  StudentName  Rating
1         Ali      65
2         Bob      76
3        John      44
4       Johny      39
5        Mark      45


In the above example, the first entry was deleted because it was a duplicate; with keep='last', the final occurrence of Mark (index 5) is the one retained.
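Two variations are often useful: restricting the check to specific columns with subset, and passing keep=False to drop every copy of a duplicated row rather than keeping one. The sketch below rebuilds the original six-row data and leaves inplace at its default of False, so df itself stays unchanged and the result is a new DataFrame; only_unique is an illustrative name.

```python
import pandas as pd

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

# keep=False drops every row whose StudentName appears more than once
only_unique = df.drop_duplicates(subset=['StudentName'], keep=False)

# inplace defaults to False, so df itself is left untouched
print(len(df), len(only_unique))  # 6 4
print(only_unique['StudentName'].tolist())  # ['Ali', 'Bob', 'John', 'Johny']
```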

Replace or Update Duplicate Values

The second method for handling duplicates involves replacing values with the Pandas replace() function. The replace() function allows us to replace specific values or patterns in a DataFrame with new values, and by default it replaces every matching instance. Its limit parameter does not cap the number of replacements: it only takes effect together with the fill-based method option, and both are deprecated in recent pandas versions. To update a single occurrence of a duplicate, we can combine replace() with duplicated() and loc.

Here is an example that renames only the first occurrence of 'Mark', starting from the original six-row DataFrame:

first_occurrences = df.duplicated(subset='StudentName', keep='last')
df.loc[first_occurrences, 'StudentName'] = df.loc[first_occurrences, 'StudentName'].replace('Mark', 'Max')
print(df)

Output:

  StudentName  Rating
0         Max      45
1         Ali      65
2         Bob      76
3        John      44
4       Johny      39
5        Mark      45

Here, keep='last' makes duplicated() flag every occurrence except the last, so only the first 'Mark' is selected and replaced. What if we want to replace the last occurrence instead? In that case, again starting from the original DataFrame, we set keep='first' so that duplicated() flags every occurrence except the first, select those rows with loc, and then apply replace() to them. Here's an example of using the duplicated() and replace() functions together:

# keep='first' flags every repeat except the first occurrence
last_occurrences = df.duplicated(subset='StudentName', keep='first')
df.loc[last_occurrences, 'StudentName'] = df.loc[last_occurrences, 'StudentName'].replace('Mark', 'Max')
print(df)

Output:

  StudentName  Rating
0        Mark      45
1         Ali      65
2         Bob      76
3        John      44
4       Johny      39
5         Max      45
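replace() also accepts a dictionary that maps old values to new ones, which is convenient for normalizing several spellings in one call before checking for duplicates again. In the sketch below, the mapping {'Johny': 'John'} is a hypothetical correction (it assumes 'Johny' is a misspelling of 'John'); with real data, that decision should follow from understanding why the variants exist.

```python
import pandas as pd

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

# Hypothetical mapping: treat 'Johny' as a misspelling of 'John'
corrections = {'Johny': 'John'}

# replace() with a dict swaps each key for its value in one call
df['StudentName'] = df['StudentName'].replace(corrections)
print(df['StudentName'].tolist())
# ['Mark', 'Ali', 'Bob', 'John', 'John', 'Mark']

# With the spellings unified, duplicated() now also flags the second 'John'
print(df.duplicated(subset='StudentName').sum())  # 2
```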

Custom Functions for Complex Replacements

In some cases, handling duplicate values requires more intricate replacements than simply removing or updating them. Custom functions let us create replacement rules tailored to our needs, and the pandas apply() function lets us run such a function over our data.

For example, let's assume the "StudentName" column contains duplicate names. Our goal is to replace duplicates using a custom function that appends a number to the end of each repeated value, making it unique.

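def add_number(name, counts):
    if name in counts:
        # Seen before: increment its counter and append it as a suffix
        counts[name] += 1
        return f'{name}_{counts[name]}'
    else:
        # First occurrence: start the counter and keep the name unchanged
        counts[name] = 0
        return name

name_counts = {}

# keep=False flags every occurrence of a duplicated name, including the first
df['is_duplicate'] = df.duplicated('StudentName', keep=False)
df['StudentName'] = df.apply(lambda x: add_number(x['StudentName'], name_counts) if x['is_duplicate'] else x['StudentName'], axis=1)

# Remove the helper column once it has served its purpose
df.drop('is_duplicate', axis=1, inplace=True)
print(df)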

Output:

  StudentName  Rating
0        Mark      45
1         Ali      65
2         Bob      76
3        John      44
4       Johny      39
5      Mark_1      45
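The apply() approach above calls a Python function once per row. For larger DataFrames, a vectorized alternative (a different technique from the custom function, shown here only as a sketch) is to number each occurrence with groupby().cumcount() and append that number to repeats only. On the sample data it produces the same names as the custom function.

```python
import pandas as pd

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

# cumcount() numbers rows within each name group: 0 for the first 'Mark', 1 for the second
occurrence = df.groupby('StudentName').cumcount()

# Keep first occurrences as-is; append _<n> to later ones
suffix = '_' + occurrence.astype(str)
df['StudentName'] = df['StudentName'].where(occurrence == 0, df['StudentName'] + suffix)

print(df['StudentName'].tolist())
# ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark_1']
```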

Aggregate Data with Duplicate Values

Data containing duplicate values can be aggregated to summarize it and gain insights. The Pandas groupby() function lets you aggregate data with duplicate values: you can group by one or more columns and calculate the mean, median, or sum of another column for each group.

Here is an example of using the groupby() method:

grouped = df.groupby(['StudentName'])
df_aggregated = grouped.sum()
print(df_aggregated)

Output:

             Rating
StudentName
Ali              65
Bob              76
John             44
Johny            39
Mark             90
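When you need more than one statistic per group, for instance to see how many rows were merged as well as their total, agg() accepts a list of function names. A minimal sketch on the same sample data; the choice of 'count', 'sum', and 'mean' is only an example.

```python
import pandas as pd

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

# Several statistics per name in one pass; 'count' shows how many rows were combined
summary = df.groupby('StudentName')['Rating'].agg(['count', 'sum', 'mean'])
print(summary)
```

A count greater than 1 in the result points directly at the names that had duplicates.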

Advanced Techniques

To handle more complex scenarios and ensure accurate analysis, there are some advanced techniques we can use. This section discusses fuzzy duplicates, duplicates in time series data, and duplicate index values.

Fuzzy Duplicates

Fuzzy duplicates are records that are not exact matches but are similar. They can occur for various reasons, including data entry errors, misspellings, and variations in formatting. We'll use the fuzzywuzzy Python library to identify such duplicates through string similarity matching.

Here is an example of identifying fuzzy duplicates:

import pandas as pd
from fuzzywuzzy import fuzz

def find_fuzzy_duplicates(dataframe, column, threshold):
    duplicates = []
    # Compare every row with each row that follows it
    for i in range(len(dataframe)):
        for j in range(i + 1, len(dataframe)):
            similarity = fuzz.ratio(dataframe[column].iloc[i], dataframe[column].iloc[j])
            # Keep the pair of rows if their strings are similar enough
            if similarity >= threshold:
                duplicates.append(dataframe.iloc[[i, j]])
    if duplicates:
        duplicates_df = pd.concat(duplicates)
        return duplicates_df
    else:
        return pd.DataFrame()

data = {
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45]
}

df = pd.DataFrame(data)
threshold = 70
fuzzy_duplicates = find_fuzzy_duplicates(df, 'StudentName', threshold)

print("Fuzzy duplicates:")
print(fuzzy_duplicates.to_string(index=False))

In this example, we create a custom function, find_fuzzy_duplicates, that takes a DataFrame, a column name, and a similarity threshold as input. The function iterates through each row in the DataFrame and compares it with every subsequent row using the fuzz.ratio method from the fuzzywuzzy library. If the similarity score is greater than or equal to the threshold, the pair of rows is added to a list. Finally, the function returns a DataFrame containing the fuzzy duplicates.

Output:

Fuzzy duplicates:
StudentName  Rating
       Mark      45
       Mark      45
       John      44
      Johny      39

In the above example, fuzzy duplicates are identified in the "StudentName" column. The find_fuzzy_duplicates function compares each pair of strings using the fuzzywuzzy library's fuzz.ratio function, which calculates a similarity score based on the Levenshtein distance. We've set the threshold at 70, meaning that any pair of names with a similarity ratio of 70 or higher is considered a fuzzy duplicate. After identifying fuzzy duplicates, we can manage them using the methods outlined in the section titled "Dealing with Duplicate Values."
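If installing fuzzywuzzy is not an option, Python's standard-library difflib offers a comparable, though not identical, similarity measure. The sketch below swaps in difflib.SequenceMatcher for fuzz.ratio; its ratio() score runs from 0 to 1 rather than 0 to 100, so a threshold of 0.7 plays the role of 70 here. The helper name similar_name_pairs is hypothetical.

```python
from difflib import SequenceMatcher
from itertools import combinations

import pandas as pd

def similar_name_pairs(names, threshold=0.7):
    """Return (i, j, score) for every pair of positions whose strings are similar."""
    pairs = []
    # combinations() yields each unordered pair once, in positional order
    for (i, a), (j, b) in combinations(enumerate(names), 2):
        # ratio() is 2*M/T: matched characters relative to total length, in [0, 1]
        score = SequenceMatcher(None, a, b).ratio()
        if score >= threshold:
            pairs.append((i, j, round(score, 2)))
    return pairs

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

pairs = similar_name_pairs(df['StudentName'].tolist())
print(pairs)
# [(0, 5, 1.0), (3, 4, 0.89)]
```

Returning positions rather than rows keeps the helper independent of pandas; the positions can be passed to df.iloc to inspect the matching rows.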

Handling Duplicates in Time Series Data

Duplicates can occur when multiple observations are recorded at the same timestamp. These values can lead to biased results if not handled properly. Here are a few ways to handle duplicate values in time series data:

- Dropping Exact Duplicates: In this method, we remove identical rows using the drop_duplicates() function in Pandas.
- Duplicate Timestamps with Different Values: If we have the same timestamp but different values, we can aggregate the data with groupby() to gain more insight, or we can select the most recent value and remove the others using drop_duplicates() with the keep parameter set to 'last'.
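The strategies above can be sketched on a small, made-up set of sensor readings. The column names timestamp and value, and the data itself, are assumptions for illustration. keep='last' treats the last row in the DataFrame as the most recent one, so this sketch assumes rows are stored in the order they were recorded.

```python
import pandas as pd

# Hypothetical readings: 10:00 appears twice (exact copy), 10:05 twice with different values
readings = pd.DataFrame({
    'timestamp': pd.to_datetime([
        '2024-01-01 10:00', '2024-01-01 10:00',
        '2024-01-01 10:05', '2024-01-01 10:05',
        '2024-01-01 10:10',
    ]),
    'value': [1.0, 1.0, 2.0, 4.0, 3.0],
})

# 1) Drop rows that are identical in every column
exact_removed = readings.drop_duplicates()

# 2a) Aggregate readings that share a timestamp (here: their mean)
averaged = exact_removed.groupby('timestamp', as_index=False)['value'].mean()

# 2b) Or keep only the last row recorded for each timestamp
latest = exact_removed.drop_duplicates(subset='timestamp', keep='last')

print(averaged['value'].tolist())  # [1.0, 3.0, 3.0]
print(latest['value'].tolist())    # [1.0, 4.0, 3.0]
```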

Dealing with Duplicate Index Values

Before addressing duplicate index values, let's first define what an index is in Pandas. An index is a label assigned to each row of a DataFrame. Pandas assigns a numeric index starting at zero by default, but any column or combination of columns can be set as the index, and the labels are not required to be unique. To identify and remove duplicate index labels, we can use the index's duplicated() and drop_duplicates() methods, respectively. In this section, we'll explore how to handle duplicates in the index using reset_index().

As its name implies, the reset_index() function resets a DataFrame's index, replacing it with the default integer index. By default (drop=False), the old index values are not lost: they are inserted into the DataFrame as a regular column. Setting drop=True discards the original index values instead. Once the former index is an ordinary column, its duplicates can be handled with the column-based methods covered earlier.

Here is an example of using reset_index():

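import pandas as pd

data = {
    'Rating': [45, 65, 76, 44, 39, 45]
}

# Use student names as the index, so 'Mark' appears twice
df = pd.DataFrame(data, index=['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'])

# Move the old index into a column named 'index' and restore a 0-based integer index
df.reset_index(inplace=True)
print(df)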

Output:

   index  Rating
0   Mark      45
1    Ali      65
2    Bob      76
3   John      44
4  Johny      39
5   Mark      45
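reset_index() turns the duplicate labels into an ordinary column. If the goal is instead to keep the labels as the index but drop the repeated ones, the index's own duplicated() method can serve as a row mask. A minimal sketch, rebuilding the name-indexed DataFrame from the example above; dup_labels and deduped are illustrative names.

```python
import pandas as pd

df = pd.DataFrame(
    {'Rating': [45, 65, 76, 44, 39, 45]},
    index=['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
)

# Index.duplicated() flags repeated labels; keep='first' leaves the first 'Mark' unflagged
dup_labels = df.index.duplicated(keep='first')

# Invert the mask to keep only rows whose label has not been seen before
deduped = df[~dup_labels]

print(df.index.is_unique, deduped.index.is_unique)  # False True
print(deduped.index.tolist())  # ['Mark', 'Ali', 'Bob', 'John', 'Johny']
```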

Best Practices

- Understand the Nature of Duplicate Data: Before taking any action, it's crucial to understand why duplicate values exist and what they represent. Identify the root cause, then determine the appropriate steps to handle them.

- Select an Appropriate Method for Handling Duplicates: As discussed in previous sections, there are several ways to handle duplicates. The method you choose depends on the nature of the data and the analysis you aim to perform.

- Document the Process: It's important to document how duplicate values were detected and addressed, so that others can follow the reasoning.

- Exercise Caution: Whenever we remove or modify data, we must make sure that eliminating duplicates doesn't introduce errors or bias into the analysis. Run sanity checks and validate the results of each action.

- Preserve the Original Data: Before performing any operation on the data, create a backup copy of the original data.

- Prevent Future Duplicates: Implement measures to prevent duplicates from occurring in the future. These can include validation during data entry, data cleansing routines, or database constraints that enforce uniqueness.
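Several of these practices (preserving the original data, sanity-checking results, and documenting what was done) can be combined in a small wrapper around drop_duplicates(). The helper below, drop_duplicates_checked, is a hypothetical sketch rather than a pandas API: it works on a copy, verifies that no duplicates remain and that the number of removed rows matches what duplicated() flagged, and prints a one-line summary.

```python
import pandas as pd

def drop_duplicates_checked(df, subset=None, keep='first'):
    """Hypothetical helper: deduplicate a copy of df and sanity-check the result."""
    original = df.copy()  # work on a copy so the input stays untouched
    cleaned = original.drop_duplicates(subset=subset, keep=keep)

    # Sanity check 1: no duplicates should remain on the chosen columns
    if cleaned.duplicated(subset=subset).any():
        raise ValueError('duplicates remain after drop_duplicates()')

    # Sanity check 2: rows removed should equal rows that duplicated() flags
    removed = len(original) - len(cleaned)
    if removed != original.duplicated(subset=subset, keep=keep).sum():
        raise ValueError('unexpected number of rows removed')

    # Document the step so others can follow what was done
    print(f'Removed {removed} duplicate row(s) (subset={subset}, keep={keep!r})')
    return cleaned

df = pd.DataFrame({
    'StudentName': ['Mark', 'Ali', 'Bob', 'John', 'Johny', 'Mark'],
    'Rating': [45, 65, 76, 44, 39, 45],
})

cleaned = drop_duplicates_checked(df, subset=['StudentName'])
print(len(df), len(cleaned))  # 6 5
```

Raising ValueError instead of using assert keeps the checks active even when Python runs with optimizations enabled.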

Final Thoughts

In data analysis, addressing duplicate values is a crucial step, because duplicates can lead to inaccurate results. By identifying and managing duplicate values efficiently, data analysts can derive precise and meaningful information. Implementing the techniques discussed here and following best practices will enable analysts to preserve the integrity of their data and extract valuable insights from it.