A high school student’s assignment sparks controversy and debates over academic integrity in the age of artificial intelligence. Joshua, a stellar student at a small California high school, found himself in hot water when TurnItIn’s AI software flagged his essay for potential AI assistance.

In this era of technological advancement, educational institutions have started using AI systems like TurnItIn to scan students’ work for plagiarism and to ensure the authenticity of submissions.

AI detection algorithms are designed to detect patterns and styles in writing, comparing them to vast databases of content and estimating the chances that something was written with AI.

But the case of Joshua’s essay brought an unprecedented twist to light.

Joshua, renowned for his articulate and eloquent writing style, had submitted an essay which, in the eyes of TurnItIn’s AI, seemed too sophisticated for a high school student.

Astonishingly, the AI system appeared to have confused the complexity and flair of Joshua’s writing with the characteristics of content generated by advanced AI text systems like ChatGPT. This occurrence exposed a potentially critical flaw in relying on AI to evaluate academic work. The software was released so quickly; what was actually behind it?

At Joshua’s high school, as at many schools nationwide, a TurnItIn flag has started to carry very serious consequences.

This semester, a class at Syracuse University also ran into the same issue, but on a much larger scale.

A bewildered professor received TurnItIn results for an entire class of students, indicating extremely high probabilities of AI authorship across the board. This wasn’t just one or two students; it was a staggering number that raised immediate suspicions about the credibility of both TurnItIn’s AI detection algorithm and his very own students.

The Syracuse incident added fuel to the fire in the broader discussion of the limitations and potential biases of AI systems in educational settings. The professor, who was well acquainted with his students’ writing abilities, found it implausible that all of them had suddenly turned to AI for assistance. Curious and concerned, he decided to investigate further.

As the professor dove into the details, it became apparent that the algorithm might be misinterpreting creativity, complex sentence structures, and articulate expression as characteristics of AI-generated content. Or was it? Nobody really knew.

Notably, this was exactly what Joshua had experienced on the other side of the country. Joshua’s flagged essay set off alarm bells, and the administration acted swiftly, possibly too swiftly.

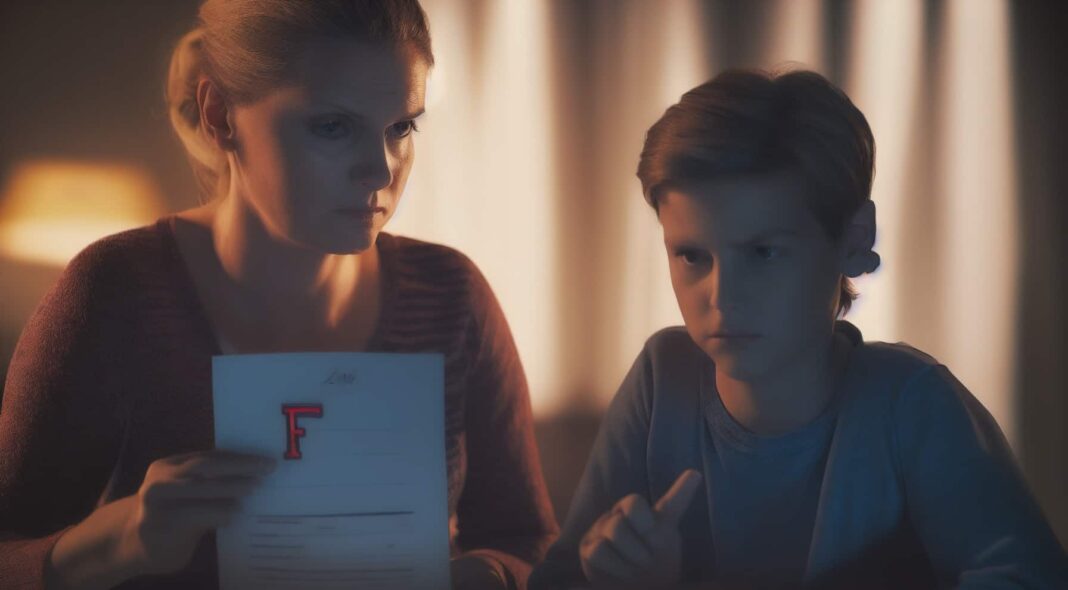

Without much deliberation, Joshua was accused of using AI to complete his assignment. The integrity of his work was called into question, and the school administration, believing the AI detection to be foolproof, initially handed down a severe punishment. They mandated a 50% deduction on his essay grade and also assigned him community service as a form of disciplinary action!

Joshua, known for his academic prowess, boasts an impressive record. He has been a recipient of the Highest Honor Roll Award and even earned one of the ten coveted Exemplary Christian awards given to high school students. His accolades include top student medals for English, Art, and Math, a Best of Show award, and a CSF certificate.

But this record was almost tainted when Joshua’s essay was flagged by TurnItIn. His mother, a relentless advocate for her son’s education, was not willing to let this go without a fight.

She said, “At our initial meeting, [the English teacher] said she was ‘shocked and stunned’ that AI flagged Joshua’s paper because he’s such a superb writer, but now her story has changed.” After further review, the teacher claimed to see a difference in Joshua’s writing. Interestingly, the same teacher mentioned that she suffers from long-term COVID brain.

The dean of the school, who is also the soccer coach, admitted to having limited knowledge of the AI system. TurnItIn offered the school a free trial of its AI update, and the school does not currently have a policy regarding the software’s use. How is this fair to students getting caught in the crossfire?

This incident raises concerns about relying on AI for academic integrity assessments, especially when the educators themselves are not well informed about the technology they are using.

Other school administrators around the world are choosing to opt out of the detection software, arguing that it is too early to make decisions that can alter a student’s academic life based on technology that is so new.

In Joshua’s case, his mother felt she had no choice but to seek legal counsel. The lawyer mentioned having handled, and won, a similar case with even harsher penalties. However, because Joshua attends a private school, the situation differed.

As AI continues to evolve, the incident at Joshua’s high school serves as a cautionary tale. It underscores the importance of understanding the technology, particularly in education, where young minds’ futures are at stake.

Joshua’s case highlights that while AI can be a useful tool, human judgment remains essential. His journey will be one for the history books as society grapples with the challenges of merging AI into education.

Is it a case of AI error or sheer brilliance? For Joshua, this experience will be a wake-up call for himself and, hopefully, for the rest of the country as it prepares for the future of education in an increasingly digital world.

In Joshua’s story, we find a stark reminder that although we are in the age of AI, we must not allow technology to overrule our human judgment and discernment.

AI algorithms, as advanced as they may be, are not infallible. They don’t understand context, they don’t feel empathy, and they don’t possess the holistic perspective that humans do. The complexity of human intellect can sometimes be mistaken for something it isn’t, as seen in Joshua’s case.

When Joshua’s mother wisely sought legal advice, the lawyer pointed out a critical aspect: private schools are governed by a different set of rules and regulations than public institutions. This adds another layer to the debate, making us question how universally applicable AI tools like TurnItIn can be across different educational settings.

Are we prepared for this new era in which AI intersects with education? Have we equipped our educators, administrators, and students with the necessary understanding of these technologies? Are our legal systems ready to handle disputes arising from AI involvement in academic matters?

As we applaud Joshua for standing up and challenging the status quo, let us also take a moment to reflect. This experience is not just a wake-up call for Joshua, but for all of us. It raises questions about the role of technology in our lives, the transparency of its application, and the critical importance of human involvement and oversight.

As we move into the future, Joshua’s story serves as a compelling reminder: it is time we question not only how we use AI, but also how AI uses us.

So, as we stand on the cusp of the AI revolution, we must ask ourselves: Are we shaping AI, or is AI shaping us? And in this delicate balance of progress and preservation, who will be the ultimate gatekeeper: man or machine?