Introduction

With the introduction of ChatGPT and the GPT-3 models by OpenAI, the world has shifted towards AI-integrated applications. In many of the applications we use every day, from e-commerce to banking, AI, and Large Language Models in particular, powers some part of the application. One such offering is OpenAI's Assistants API, which lets developers build chatbot-style assistants. OpenAI recently released the Assistants API in Beta, a tool designed to elevate the user experience.

Learning Objectives

- Learn the process of creating purpose-built AI assistants with specific instructions.

- Explore the concept of persistent and infinitely long threads in the Assistants API.

- Demonstrate creating an AI assistant using the OpenAI library, specifying parameters like name, instructions, model, and tools.

- Learn the process of creating runs to execute AI assistants on specific threads.

- Understand the pricing structure of the Assistants API, considering factors like language model tokens, Code Interpreter sessions, and Retrieval tool usage.

This article was published as a part of the Data Science Blogathon.

What is the Assistants API? What Can It Do?

OpenAI recently released the Assistants API, which is currently in the Beta phase. This API allows us to build and integrate AI assistants into our applications using OpenAI's Large Language Models and tools. Companies tailor these assistants for a specific purpose and provide them with the relevant data for that particular use. Examples include an AI Weather assistant that provides weather-related information or an AI Travel assistant that answers travel-related queries.

These assistants are built with statefulness in mind. That is, they retain previous conversations to a large extent, so developers do not have to worry about state management and can leave it to OpenAI. The typical flow is below:

- Creating an Assistant, where we select the data to ingest, the model to use, the instructions for the Assistant, and the tools to use.

- Then, we create a Thread. A Thread stores a user's messages and the LLM's replies. The Thread is responsible for managing the state of the conversation, and OpenAI takes care of it.

- After creating the Thread, we add Messages to it. These are the messages the user types to the AI Assistant or the replies from the Assistant.

- Finally, we run the Assistant on that Thread. Based on the messages in the Thread, the Assistant calls the OpenAI LLMs to produce a suitable response and may also call some tools along the way, which we will discuss in the next section.

The Assistant, Thread, Message, and Run are called Objects in the Assistants API. Along with these objects, there is another object called a Run Step, which provides the detailed steps the Assistant has taken in a Run, giving insight into its inner workings.
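To make the relationship between these objects concrete, here is a toy, in-memory model of the flow in plain Python. It is not the OpenAI SDK: the classes, the `reply` callback that stands in for the LLM call, and the step descriptions are all hypothetical, and the real API keeps this state on OpenAI's servers. It only illustrates the division of labour described above: the Assistant holds configuration, the Thread holds the conversation, and each Run reads the whole Thread and appends a reply to it.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical, in-memory stand-ins for the Assistants API objects (NOT the OpenAI SDK).

@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str

@dataclass
class Assistant:
    name: str
    instructions: str
    # Hypothetical stand-in for the LLM call: receives instructions + full history.
    reply: Callable[[str, List[Message]], str]

@dataclass
class Thread:
    # The Thread owns the conversation state, so history is never resent by the caller.
    messages: List[Message] = field(default_factory=list)

    def add_user_message(self, content: str) -> Message:
        msg = Message("user", content)
        self.messages.append(msg)
        return msg

@dataclass
class Run:
    status: str = "queued"
    steps: List[str] = field(default_factory=list)  # rough analogue of Run Steps

def run_assistant(assistant: Assistant, thread: Thread) -> Run:
    """Execute one Run: read the whole Thread, produce a reply, append it to the Thread."""
    run = Run()
    run.status = "in_progress"
    run.steps.append(f"read {len(thread.messages)} messages")
    answer = assistant.reply(assistant.instructions, thread.messages)
    thread.messages.append(Message("assistant", answer))
    run.steps.append("created assistant message")
    run.status = "completed"
    return run

# Usage: the toy "model" just reports how much history it was given.
echo = Assistant(
    name="Echo",
    instructions="Repeat the last question.",
    reply=lambda instr, history: f"seen {len(history)} msgs; last: {history[-1].content}",
)
thread = Thread()
thread.add_user_message("How to create a table in PostgreSQL?")
first = run_assistant(echo, thread)
thread.add_user_message("Add some values to the table you created")
second = run_assistant(echo, thread)
```

Because the second Run sees all three earlier messages, its reply can build on the first exchange without the caller resending anything; that is the statefulness the Assistants API manages for us.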

We have frequently mentioned the word tool, so what does it have to do with the OpenAI Assistants API? Tools are like weapons that allow the Assistant to perform additional tasks. These include OpenAI-hosted tools like Knowledge Retrieval and the Code Interpreter. We can also define our own custom tools using Function Calling, which we will not discuss in this article.

So, let's go through the tools in detail.

- Code Interpreter: This runs Python code in a sandboxed environment, enabling the Assistant to execute custom Python scripts. It is useful for scenarios like data analysis, where the Assistant can generate code to analyze CSV files, run it, and give the user a response.

- Function Calling: Developers can define custom tools, allowing the Assistant to build responses iteratively by using these tools and their outputs.

- Retrieval: Essential for AI Assistants, this tool lets us provide data for the Assistant to analyze. For instance, when creating an Assistant for a product, the relevant information is chunked, embedded, and stored in a vector store. The Assistant retrieves this data from the knowledge base when responding to user queries.
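The Retrieval tool's internals (chunk size, embedding model, vector store) are managed by OpenAI, so the following is only a self-contained toy that mirrors the chunk, embed, and retrieve idea described above. It swaps in a bag-of-words vector with cosine similarity for a real embedding model; `chunk_text`, `embed`, `cosine`, and `retrieve` are hypothetical helpers, not part of any OpenAI library.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def chunk_text(text: str, chunk_size: int = 8, overlap: int = 2) -> List[str]:
    """Split text into windows of `chunk_size` words that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system calls an embedding model)."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, store: List[Tuple[str, Counter]], k: int = 1) -> List[str]:
    """Return the k stored chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

# Usage: build a tiny "vector store" from two documents and query it.
docs = [
    "CREATE TABLE defines a new table with named columns and types.",
    "INSERT INTO adds new rows of values to an existing table.",
]
store = [(c, embed(c)) for d in docs for c in chunk_text(d)]
top = retrieve("How do I insert values into a table?", store)
```

The overlap between chunks is a common design choice so that a sentence cut at a chunk boundary still appears whole in at least one chunk.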

Building Our Assistant

In this section, we will go through creating an Assistant, adding messages to a Thread, and running the Assistant on that Thread. We will begin by installing the OpenAI library.

```
# installs the openai library that contains the Assistants API
!pip install openai
```

This article uses v1.2.3 of the library, which was the latest version at the time of writing. Let's start by creating our client.

```python
# importing os library to read environment variables
import os

# importing openai library to interact with the Assistants API
from openai import OpenAI

# storing the OPENAI API KEY in an environment variable
# (replace the placeholder with your own key; never publish a real key)
os.environ["OPENAI_API_KEY"] = "<your-openai-api-key>"

# creating our OpenAI client by providing the API KEY
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
```

So, we import the OpenAI class from the openai library. Then, we store our OpenAI API key in an environment variable and instantiate the OpenAI class, passing that key as the api_key argument. The client variable is our instance of the OpenAI class.

Creating an Assistant

Now it's time to create our assistant.

```python
# creating an assistant
assistant = client.beta.assistants.create(
    name="PostgreSQL Expert",
    instructions="You are a PostgreSQL expert and can answer any question in a simple manner with an example",
    model="gpt-4-1106-preview",
    tools=[{"type": "retrieval"}],
)
```

To create an Assistant, we call the create() method of the assistants class and pass it the following parameters:

- name: This is the name of our Assistant. In this example, we call it PostgreSQL Expert.

- instructions: This is the context/additional information given to the Assistant.

- model: The model the Assistant will use to generate responses. In this case, we are using the newly released GPT-4 preview model.

- tools: These are the tools we discussed in the previous section, which the Assistant will use to generate responses. We pass the tools as a list of dictionaries. Here, we are using the retrieval tool for ingesting the data.

Load the Documents

With that, we have created an assistant and assigned it to the variable assistant. The next step is loading the documents.

```python
# upload the file (the with-block closes the file handle afterwards)
with open("/content/LearnPostgres.pdf", "rb") as f:
    file = client.files.create(
        file=f,
        purpose="assistants",
    )

# update the Assistant
assistant = client.beta.assistants.update(
    assistant.id,
    file_ids=[file.id],
)
```

- The code above uploads a document and attaches it to the Assistant. Here, I have a PDF that contains learning material about PostgreSQL.

- We use the files class of the OpenAI client to create a file with the create() method, passing "assistants" as the value of the purpose parameter, since this file will be used by an Assistant.

- In the second step, we update our Assistant with the created file. For this, we call the update() method and pass in the Assistant ID (the Assistant object we already created has a unique ID) and the file IDs (each created file has its own unique ID).

As we have included the retrieval tool in our Assistant configuration, it takes care of chunking our LearnPostgres.pdf, converting it into embeddings, and retrieving the relevant information from it.

Creating a Thread and Storing Messages

In this section, we will create a Thread and add Messages to it. We will start by creating a new Thread.

```python
# creating a thread
thread = client.beta.threads.create()
```

The create() method of the threads class is used to create a Thread. A Thread represents a conversation session. Similar to assistants, the Thread object also has a unique ID associated with it. Also, note that we have not passed any Assistant ID to it, meaning that the Thread is isolated and not coupled to the assistant. Now, let's add a message to our newly created Thread.

```python
# adding our first message to the thread
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="How to create a table in PostgreSQL",
)
```

- Messages are the blocks of conversation containing the user queries and responses. To add a message to a Thread, we use the create() method of the messages class, which takes the following parameters:

- thread_id: A unique ID associated with each created Thread. Here, we pass in the ID of the Thread we created earlier.

- role: Who is sending this message? In our case, it is the user.

- content: This is the user's query. In our example, we give it "How to create a table in PostgreSQL."

Running Our Assistant

Now, we run the Assistant by creating a Run on the Thread that we want our Assistant to run on.

```python
# creating a Run
run = client.beta.threads.runs.create(
    thread_id=thread.id,
    assistant_id=assistant.id,
)
```

- To create a Run, we use the create() method of the runs class and pass it the Thread ID (that is, which Thread, or conversation session, we want the Assistant to run on) and the Assistant ID (that is, which Assistant to run), and store the result in the run variable.

- Creating a Run does not return the response. Instead, we need to wait until the Run is completed. A Run can be thought of as an asynchronous task, so we must poll it to check whether it has finished.

Creating a Function

The Run object provides an attribute called status, which tells us whether a particular Run is queued, in progress, completed, and so on. For this, we create the following function.

```python
import time

# creating a function that waits until the Run has finished
def poll_run(run, thread):
    # keep polling while the Run is still queued, running, or being cancelled;
    # any other status (completed, failed, expired, etc.) ends the loop
    while run.status in ("queued", "in_progress", "cancelling"):
        time.sleep(0.5)
        run = client.beta.threads.runs.retrieve(
            thread_id=thread.id,
            run_id=run.id,
        )
    return run

# waiting for the run to finish
run = poll_run(run, thread)
```

- Here, we create a function called poll_run(). This function takes in the Run and Thread objects we created earlier.

- A Run is considered processed when its status is "completed." Hence, we poll the Run in a loop for as long as its status shows it is still queued or in progress.

- In this loop, we call the retrieve() method of the runs class and pass it the Run ID and Thread ID. The retrieve method returns the same Run object with an updated status.

- We retrieve the Run object every 0.5 seconds to check whether it is still running.

- Once the status leaves those in-progress states, we return the Run. A successful Run ends with the status "completed"; looping only while the Run is pending, rather than until it is "completed", keeps the function from looping forever if the Run fails or expires.

In this Run step, the Assistant we created uses the Retrieval tool to fetch information relevant to the user's query from the uploaded data, passes it to the model we specified, and generates a response. This generated response is stored in the Thread. Now, our Thread has two messages: one is the user query, and the other is the Assistant's response.
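poll_run() checks every 0.5 seconds and places no limit on the total wait. The sketch below is one way to bound it, assuming you are happy to treat a slow Run as an error. `wait_for_run` and its `fetch_status` argument are hypothetical names; the status strings are the Run statuses listed in the API reference, and the delay doubles after each poll up to a cap. Passing the status lookup in as a callable keeps the function testable without network access.

```python
import time
from typing import Callable

# Statuses after which a Run will not progress further on its own.
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired", "requires_action"}

def wait_for_run(fetch_status: Callable[[], str], timeout: float = 60.0,
                 interval: float = 0.5, max_interval: float = 5.0) -> str:
    """Poll `fetch_status` until it returns a terminal status or `timeout` seconds pass.

    The delay between polls doubles after each attempt, capped at `max_interval`.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run still '{status}' after {timeout} seconds")
        time.sleep(interval)
        interval = min(interval * 2, max_interval)

# With the real client (not executed here), fetch_status could be:
# lambda: client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id).status

# Offline usage with a scripted sequence of statuses.
statuses = iter(["queued", "in_progress", "completed"])
final = wait_for_run(lambda: next(statuses), interval=0.01)
```

"requires_action" is included because a Run in that state waits for tool outputs (for example from Function Calling) and will not finish by polling alone.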

How to Retrieve Messages from the Thread?

Let's get the messages stored in our Thread.

```python
# extracting the messages
messages = client.beta.threads.messages.list(thread_id=thread.id)

for m in messages:
    print(f"{m.role}: {m.content[0].text.value}")
```

- In the first step, we extract all the messages in the Thread by calling the list() method of the messages class, passing it the ID of the Thread whose messages we want.

- Next, we loop through all the messages in the list and print them. The text of both the message we added to the Thread and the messages generated by the Assistant is stored in message.content[0].text.value.

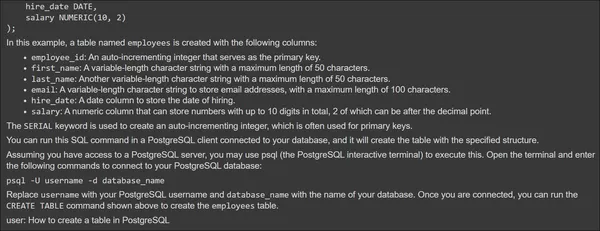

The above image shows the Assistant's response at the top and the user query at the bottom. Now, let's send a second message and test whether the Assistant can access the previous conversation.

```python
# creating a second message
message2 = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="Add some values to the table you have created",
)

# creating a Run
run = client.beta.threads.runs.create(
    thread_id=thread.id,
    assistant_id=assistant.id,
)

# waiting for the Run to finish
run = poll_run(run, thread)

# extracting the messages
messages = client.beta.threads.messages.list(thread_id=thread.id)

for m in messages:
    print(f"{m.role}: {m.content[0].text.value}")
```

- Here, we create another message called message2, and this time we pass the query "Add some values to the table you have created." This query refers to the previous conversation, and we add it to our Thread.

- Then we go through the same process of creating a Run with the Assistant and polling it.

- Finally, we retrieve and print all the messages from the Thread.

The Assistant could indeed access the information from the previous conversation and also use the Retrieval tool to generate a response to the query. Thus, through the OpenAI Assistants API, we can create custom Assistants and then integrate them into our applications in any form. OpenAI also plans to release more tools that the Assistants API can use.
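The printed output shows the newest message first because messages.list() returns messages in descending order of creation by default. The printing loop also reads m.content[0].text.value, which assumes the first content block of every message is text, while a message can carry other block types (for example an image file produced by the Code Interpreter). The helper below is a sketch that prints the conversation oldest-first and keeps only text blocks. `format_transcript` is a hypothetical name, and the demo uses SimpleNamespace stand-ins that only mimic the shape m.role, m.content[i].type, and m.content[i].text.value; with the real client you would pass the result of client.beta.threads.messages.list(thread_id=thread.id).

```python
from types import SimpleNamespace
from typing import Iterable, List

def format_transcript(messages: Iterable, newest_first: bool = True) -> List[str]:
    """Return 'role: text' lines in chronological order, skipping non-text content blocks."""
    ordered = list(messages)
    if newest_first:
        ordered.reverse()  # messages.list() returns the newest message first by default
    lines = []
    for m in ordered:
        texts = [block.text.value for block in m.content if block.type == "text"]
        if texts:
            lines.append(f"{m.role}: {' '.join(texts)}")
    return lines

# Stand-in objects shaped like the SDK's message objects (illustration only).
def _msg(role, *blocks):
    return SimpleNamespace(role=role, content=list(blocks))

def _text(value):
    return SimpleNamespace(type="text", text=SimpleNamespace(value=value))

page = [  # newest first, as returned by messages.list()
    _msg("assistant", _text("Use INSERT INTO to add rows.")),
    _msg("user", _text("Add some values to the table you have created")),
    _msg("assistant", SimpleNamespace(type="image_file"), _text("Use CREATE TABLE to define a table.")),
    _msg("user", _text("How to create a table in PostgreSQL")),
]
for line in format_transcript(page):
    print(line)
```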

Assistants API Pricing

The Assistants API is billed based on the model chosen and the tools used. Tools like Retrieval and the Code Interpreter each have a separate cost of their own. Starting with the model, each OpenAI model has its own price based on the number of input tokens used and the number of output tokens generated. For the per-model prices, see OpenAI's pricing page for its Large Language Models.

As for the Code Interpreter, it is priced at $0.03 per session, with sessions active for one hour by default. One session is associated with one Thread, so if you have N Threads running, the cost will be N * $0.03.

Retrieval, on the other hand, is priced at $0.20 per GB per assistant per day. If N Assistants each have G GB of files for this tool, the cost will be N * G * $0.20 per day. The number of Threads does not affect the retrieval pricing.
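Using only the rates quoted above ($0.03 per Code Interpreter session and $0.20 per GB per assistant per day for Retrieval), the tool charges can be estimated with a few lines of arithmetic. Model token charges are left out because they depend on the chosen model. `estimate_tool_cost` is a hypothetical helper, and the rates are those at the time of writing, so check OpenAI's pricing page before relying on them.

```python
# Rates as quoted in this article (USD); they may have changed since.
CODE_INTERPRETER_PER_SESSION = 0.03        # one session per Thread, 1 hour by default
RETRIEVAL_PER_GB_PER_ASSISTANT_DAY = 0.20

def estimate_tool_cost(sessions: int, retrieval_gb: float, assistants: int, days: int) -> float:
    """Estimate Code Interpreter + Retrieval charges in USD (excludes model token costs)."""
    code_interpreter = sessions * CODE_INTERPRETER_PER_SESSION
    retrieval = retrieval_gb * assistants * days * RETRIEVAL_PER_GB_PER_ASSISTANT_DAY
    return round(code_interpreter + retrieval, 2)

# 10 Threads each opening one Code Interpreter session, plus
# 2 Assistants that each have 0.5 GB of files attached, for 30 days.
print(estimate_tool_cost(sessions=10, retrieval_gb=0.5, assistants=2, days=30))
```

In this example the storage term dominates: Retrieval cost grows with data size, assistant count, and days, whatever the number of Threads, while Code Interpreter cost grows with the number of sessions.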

Data Privacy and Ethical Considerations

Regarding ethical considerations and the use of user data, OpenAI states that it puts data privacy first. The user is the sole owner of the data sent to the OpenAI API and the data received from it. Also, OpenAI does not train on the user's data, and data retention is within the user's control. Even custom models trained on OpenAI's platform belong solely to the user who created them.

OpenAI follows strict compliance practices. It encrypts data at rest (using AES-256) and in transit (TLS 1.2+). OpenAI has been audited for SOC 2 compliance, meaning that it puts rigorous effort into data privacy and security. OpenAI also allows strict access control over who can access the data within an organization.

Conclusion

The OpenAI Assistants API offers a new approach to creating and integrating AI assistants into applications. These assistants are developed to handle a specific purpose or task. Having explored the functionalities and tools of this API, including the Code Interpreter, Retrieval, and the creation of custom tools, it becomes evident that developers now have a powerful arsenal. The stateful nature of assistants, managed seamlessly through Threads, reduces the burden on developers, allowing them to focus on creating tailored AI experiences.

Key Takeaways

- The Assistants API enables the development of purpose-built AI assistants tailored for specific tasks.

- Including tools like the Code Interpreter and Retrieval broadens the scope of what AI assistants can accomplish, from running custom Python code to accessing external knowledge.

- The API introduces persistent and infinitely long threads, simplifying state management and providing flexibility for developers.

- Threads and Assistants are isolated from each other, allowing developers to reuse a Thread and run different Assistants on it.

- The Function Calling tool allows developers to define custom tools that the Assistant can use during the response generation process.

Frequently Asked Questions

A. The OpenAI Assistants API is a tool for developers to create and integrate Assistants for specific application tasks.

A. The key objects include Assistant, Thread, Message, Run, and Run Step. These are the objects needed to build a specific Assistant from start to finish.

A. Runs can have statuses such as queued, in_progress, requires_action, cancelling, cancelled, failed, completed, and expired. We have to poll the Run object to check whether it has finished.

A. Data ingestion is taken care of by the OpenAI Retrieval tool. This tool takes files of different formats, chunks them, and transforms them into embeddings. When querying, it retrieves the information relevant to the user's query.

A. The Assistants API currently supports the Retrieval and Code Interpreter tools. It also includes Function Calling, which allows developers to create their own tools for the assistant.

References

Here is the official documentation for the OpenAI Assistants API:

- https://platform.openai.com/docs/assistants/overview

- https://platform.openai.com/docs/api-reference/assistants

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.