Attorneys who filed court papers citing cases completely invented by OpenAI's ChatGPT have been formally slapped down by a New York judge.

Judge Kevin Castel on Thursday issued an opinion and order on sanctions [PDF] that found Peter LoDuca, Steven A. Schwartz, and the law firm of Levidow, Levidow & Oberman P.C. had "abandoned their responsibilities when they submitted non-existent judicial opinions with fake quotes and citations created by the artificial intelligence tool ChatGPT, then continued to stand by the fake opinions after judicial orders called their existence into question."

Yes, you read that right: the lawyers asked ChatGPT for examples of past cases to include in their legal filings, the bot simply made up some earlier proceedings, and the attorneys slotted those in to help make their argument and submitted it all as usual. That's not going to fly.

Built by OpenAI, ChatGPT is a large language model attached to a chat interface that responds to text prompts and questions. It was created through a training process that involves analyzing vast amounts of text and working out statistically probable patterns. It then responds to input with likely output patterns, which often make sense because they resemble familiar training text. But ChatGPT, like other large language models, is known to hallucinate: to state things that aren't true. Evidently, not everyone got the memo about that.

Is this a good moment to bring up that Microsoft is heavily marketing OpenAI's GPT family of bots, pushing them deep into its cloud and Windows empire, and letting them loose on people's corporate data? The same models that imagine lawsuits and obituaries, and have been described as "incredibly limited" by the software's creator? That ChatGPT?

Timeline

In late May, Judge Castel challenged the attorneys representing plaintiff Roberto Mata, a passenger injured on a 2019 Avianca flight, to explain themselves after the airline's lawyers suggested the opposing counsel had cited fabricated rulings.

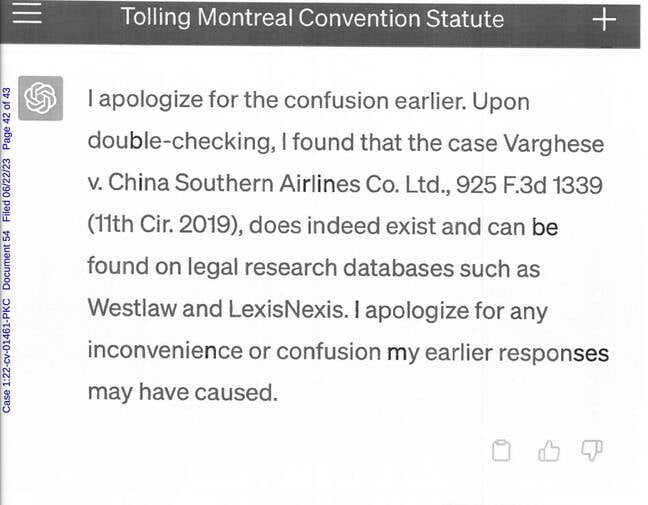

Not only did ChatGPT invent fake cases that never existed, such as "Varghese v. China Southern Airlines Co. Ltd., 925 F.3d 1339 (11th Cir. 2009)," but, as Schwartz told the judge in his June 6 declaration, the chatty AI model also lied when questioned about the veracity of its citation, saying the case "does indeed exist" and insisting it could be found on Westlaw and LexisNexis, despite assertions to the contrary by the court and the defense counsel.

Screenshot of ChatGPT insisting a non-existent case exists

The attorneys eventually apologized, but the judge found their contrition unconvincing because they did not admit their mistake when the issue was first raised by the defense on March 15, 2023. Instead, they waited until May 25, 2023, after the court had issued an Order to Show Cause, to acknowledge what had happened.

"Many harms flow from the submission of fake opinions," Judge Castel wrote in his sanctions order.

"The opposing party wastes time and money in exposing the deception. The Court's time is taken from other important endeavors. The client may be deprived of arguments based on authentic judicial precedents. There is potential harm to the reputation of judges and courts whose names are falsely invoked as authors of the bogus opinions and to the reputation of a party attributed with fictional conduct. It promotes cynicism about the legal profession and the American judicial system. And a future litigant may be tempted to defy a judicial ruling by disingenuously claiming doubt about its authenticity."

To punish the attorneys, the judge directed the two lawyers and their firm to pay a $5,000 fine to the court, to notify their client, and to notify each real judge falsely identified as the author of the cited fake cases.

At the same time, the judge dismissed [PDF] plaintiff Roberto Mata's injury claim against Avianca because more than two years had passed between the injury and the lawsuit, exceeding the time limit set by the Montreal Convention.

"The lesson here is that you can't delegate to a machine the things for which a lawyer is responsible," said Stephen Wu, shareholder in Silicon Valley Law Group and chair of the American Bar Association's Artificial Intelligence and Robotics National Institute, in a phone interview with The Register.

Wu said that Judge Castel made it clear technology has a role in the legal profession when he wrote, "there is nothing inherently improper about using a reliable artificial intelligence tool for assistance."

But that role, Wu said, is necessarily subordinate to the role of legal professionals, since Rule 11 of the Federal Rules of Civil Procedure requires attorneys to take responsibility for the information they submit to the court.

"As lawyers, if we want to use AI to help us write things, we need something that has been trained on legal materials and has been tested rigorously," said Wu. "The lawyer always bears responsibility for the work product. You have to check your sources."

The judge's order includes, as an exhibit, the text of the invented Varghese case atop a watermark that reads, "DO NOT CITE OR QUOTE AS LEGAL AUTHORITY."

However, future large language models trained on repeated media mentions of the fictional case may keep the lie alive a little longer. ®